Tightened security

We regularly exchange confidential, highly sensitive data on the internet. To make these transactions one hundred percent secure, computer scientists working in the Centre for Cyber Trust project at ETH Zurich and the University of Bonn are developing innovative, trustworthy security systems—and have now scored a breakthrough in verification procedures.

Digital technologies have become an integral part of our lives. We search the internet for information, communicate by email, and interact on social media and messaging platforms. We use smartphones to do our banking and credit cards to shop online. But how do we really know who we’re dealing with in these digital spaces? This question is at the heart of the Centre for Cyber Trust project conducted at ETH Zurich and the University of Bonn.

“In the real world, we see who we’re speaking to and, when in doubt, can ask to see someone’s ID,” says David Basin, who leads the WSS-funded project with two ETH colleagues, Peter Müller and Adrian Perrig, as well as Matthew Smith from the University of Bonn. By contrast, authenticating someone’s identity is more difficult online, where information is exchanged in the form of bits, or binary digits.

To guarantee the authenticity of a sender or a website, computer scientists work with so-called protocols, which are sets of rules that govern the interactions between computers, networks or processes. “For example, e-banking systems use a cryptographic protocol known as TLS to ensure that clients can safely communicate with their bank and that data can’t be read or manipulated during transmission,” David Basin explains.

Eliminating weak links

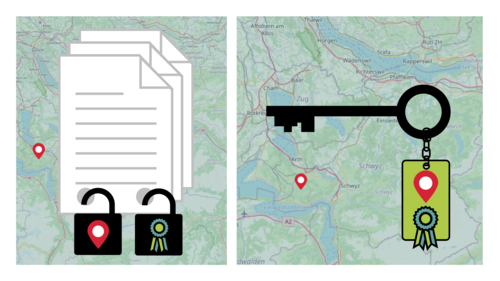

Nevertheless, hackers are still capable of compromising these protocols to steal data. That’s why researchers in the Centre for Cyber Trust project are working on techniques to ensure that protocols are fully secure. In addition to writing these protocols, it’s also necessary to rule out any weaknesses that cybercriminals could take advantage of. “There are two main causes for vulnerabilities in these systems: technical and human error,” Peter Müller explains.

While Matthew Smith in Bonn is focusing on the human side of the equation, David Basin and Peter Müller are working on excluding technical flaws. To do so, they use verification techniques: they run programs that automatically construct mathematical proofs verifying that a software program has the security properties its developers intended—protection against cyberattacks, for example. “We define the set of all the ways that two parties can run a protocol and that a third party—a cybercriminal or hacker—can intervene,” David Basin says. These formalised definitions, known as semantics in programming language theory, can then be analysed mathematically.

Vulnerable interfaces

These verification processes are time-consuming, and the security protocol itself isn’t the only thing that can cause problems: errors also occur in the code used for protocol implementation—when a protocol is integrated in a software program. “In the implementation step, things can be accidentally omitted, or problems arise that are not reflected in the protocol description itself,” Peter Müller explains. “That’s why we want to verify both the protocol and its implementation.”

The interface between these two steps has always been a weak point in proofs for whether a protocol is running exactly as it should, Müller continues. “At the interface, there were previously no tools to control that the two proofs—the proof that a protocol design is secure and the proof that the implementation is correct—fit together properly. Instead, it’s people who decide whether the protocol and implementation levels are working together error-free—which is error-prone in practice.”

Now, however, in painstaking work, the two researchers and their groups have succeeded in using mathematical descriptions to link the two layers together so closely that the interface weakness in the verification proof has been completely eliminated. “It’s a breakthrough,” David Basin says with evident satisfaction. And Peter Müller adds: “Researchers have been working on code verification for sixty years, and on protocol verification for thirty. Before us, however, no one had ever managed to link protocol verification and high-performance protocol implementation this closely.”

Error-free not good enough

In effect, linking the two levels means end-to-end verification: during the entire process, from protocol to code, each and every step has been verified by a tool. Nevertheless, there remains much to do. For one, the researchers want to automate the verification further and adapt them for more complex implementations, Peter Müller explains.

And another important issue is under investigation: although the new verification methods for ruling out errors in the protocol and code are essential for secure websites, error-free isn’t good enough, as David Basin says. “Users also need to understand and trust these security architectures.” That’s why ways of gaining this trust is the other key question the researchers are exploring.